Whether you know it or not, if you’ve been on the internet, you’ve interacted with an API. Application programming interfaces (APIs) are sets of protocols that allow software applications to communicate with each other. If your site has lots of traffic, many people may be interacting with your API at once. So how do you keep usage fair? API rate limiting can help.

Savvy web developers limit the number of requests their APIs will accept from each user in a given period. This improves the user experience and can protect your application from malicious attacks.

Let’s take a deeper look into what API rate limits are all about.

What is API rate limiting?

API rate limiting is a set of measures put in place to help ensure the stability and performance of an API system. It works by setting limits on how many requests a client can make within a certain period of time, usually a few seconds or minutes, and sometimes on which actions those requests can perform.

If too many requests are made over that period, the API system will return an error message telling you that the rate limit has been exceeded.

Why does rate limiting exist?

API rate limiting exists to protect both the stability and performance of an API. Rate limiting ensures that the API isn’t overwhelmed with requests, which could lead to downtime or slow responses.

Additionally, APIs often need to be protected from malicious actors. Capping how many requests any one client can send helps blunt denial-of-service attacks that would otherwise overwhelm your system.

The Importance of API Rate Limiting

API rate limiting helps to ensure the performance and stability of an API system. You can avoid downtime, slow responses, and malicious attacks. Additionally, rate limiting can prevent accidental or unintentional misuse of an API. This helps mitigate security risks or data loss.

By implementing rate limiting, businesses are able to protect their valuable resources and data — all while providing reliable performance for their users.

Additionally, rate limiting can help businesses save on costs associated with managing an API system. You can prevent unnecessary requests from being processed, which saves money.

[Video: What are the different API rate limiting methods needed while designing large scale systems & why?]

What does “API rate limit exceeded” mean?

“API Rate Limit Exceeded” is a warning message that appears when the number of requests a user makes exceeds what the system permits. Generally, this means that too many requests were sent to the API in too short a time frame. Over HTTP, this is commonly signaled with the status code 429 (Too Many Requests). Some or all requests will not be processed. The user will need to wait for the rate limit to reset and then try again.
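On the client side, the usual reaction to this message is to pause before retrying. The sketch below assumes the API signals the condition with HTTP status 429 and may send a `Retry-After` header expressed in seconds. The function name `seconds_to_wait` and the one-second fallback are illustrative choices, not part of any particular API.

```python
from typing import Mapping, Optional


def seconds_to_wait(status: int, headers: Mapping[str, str],
                    default_delay: float = 1.0) -> Optional[float]:
    """Return how long to pause before retrying, or None if no pause is needed."""
    if status != 429:
        return None
    value = headers.get("Retry-After")
    if value is None:
        return default_delay
    try:
        return max(0.0, float(value))
    except ValueError:
        # Retry-After can also be an HTTP date; this sketch just uses the default
        return default_delay


print(seconds_to_wait(429, {"Retry-After": "30"}))
```

Real HTTP header names are case-insensitive, so a production client would normalize them before the lookup.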

Now, let’s explore best practices to keep in mind when setting rate limits.

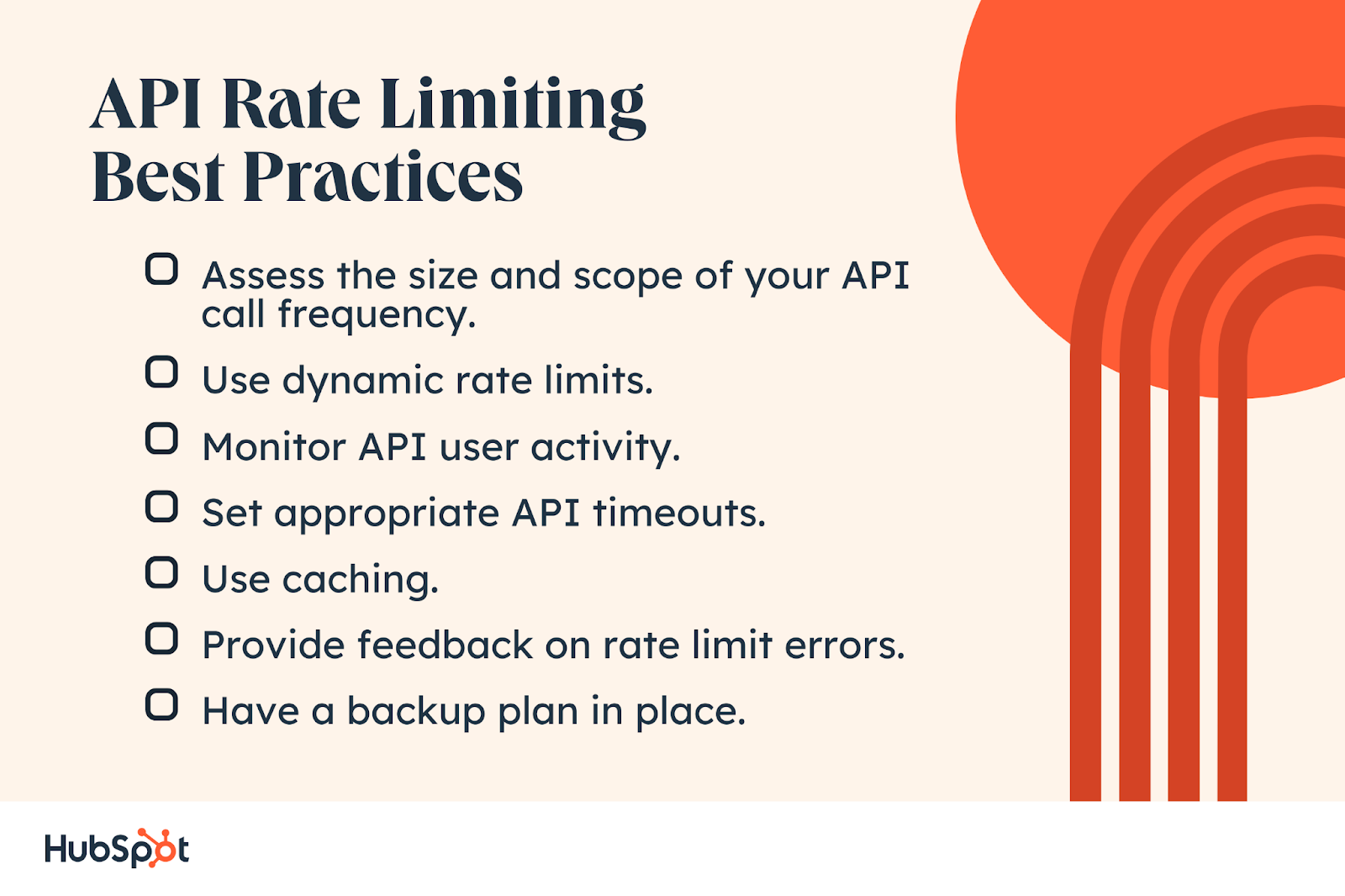

API Rate Limiting Best Practices

1. Assess the size and scope of your API call frequency.

Analyze what type of traffic you’re receiving and what is reasonable when it comes to rate limiting. APIs often have usage limits or pricing tiers based on call frequency. By understanding the scope of your API call frequency, you can optimize your usage. This helps users stay within the allocated limits and avoid unnecessary additional costs.

Making frequent API calls can impact the performance and responsiveness of your application. Assessing size allows you to identify potential bottlenecks and optimize your code accordingly.

2. Use dynamic rate limits.

Rather than fixing one limit for all conditions, consider adjusting limits as conditions change. Usage patterns and system load are common inputs: you might allow more requests when the system has spare capacity and tighten limits when it is under strain.

Dynamic limits let you use your infrastructure efficiently during quiet periods while still protecting the API during traffic spikes.
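One possible way to make a limit dynamic is to scale it with current system load. The following is a minimal sketch under assumed rules: the function `dynamic_limit`, the 50% load threshold, and the linear scaling are all hypothetical choices made for illustration.

```python
def dynamic_limit(base_limit: int, load: float, floor: int = 1) -> int:
    """Scale a per-window request limit down as system load rises.

    `load` is a utilization figure between 0.0 (idle) and 1.0 (saturated).
    """
    load = min(max(load, 0.0), 1.0)
    if load <= 0.5:
        # Plenty of headroom: allow the full base limit
        return base_limit
    # Above 50% load, shrink linearly toward `floor` at 100% load
    scale = (1.0 - load) / 0.5
    return max(floor, int(base_limit * scale))


print(dynamic_limit(100, 0.75))
```

The `floor` keeps every caller able to make at least some requests even when the system is saturated.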

3. Monitor API user activity.

When approaching API limiting, keep track of who is using your API and what they’re doing. This can help you identify any potential abuse or people trying to overload your API. Keep track of what rate limits have been set, what requests have been made, and what response times have been experienced.

If you want to go above and beyond, create a list of trusted users or services that are allowed to exceed the standard rate limit.
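As a rough illustration of tracking usage alongside a trusted list, the sketch below counts requests and response times per API key and gives trusted keys a higher allowance. The class `UsageMonitor`, its method names, and the limit values are assumptions made for this example.

```python
from collections import Counter


class UsageMonitor:
    """Track request counts and response times per API key."""

    def __init__(self, standard_limit, trusted_limit, trusted_keys=()):
        self.standard_limit = standard_limit
        self.trusted_limit = trusted_limit
        self.trusted_keys = set(trusted_keys)
        self.requests = Counter()   # api_key -> number of requests seen
        self.total_ms = Counter()   # api_key -> summed response time in ms

    def record(self, api_key, response_ms):
        self.requests[api_key] += 1
        self.total_ms[api_key] += response_ms

    def limit_for(self, api_key):
        # Trusted callers get the higher allowance
        if api_key in self.trusted_keys:
            return self.trusted_limit
        return self.standard_limit

    def over_limit(self):
        """Return the keys whose request count exceeds their limit."""
        return sorted(k for k, n in self.requests.items()
                      if n > self.limit_for(k))

    def average_ms(self, api_key):
        count = self.requests[api_key]
        return self.total_ms[api_key] / count if count else 0.0
```

A real deployment would likely reset or window these counters and feed them into dashboards or alerts.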

4. Set appropriate API timeouts.

Setting reasonable API timeouts ensures a better user experience for your application’s end-users. If an API call takes too long to respond, it can lead to frustration and abandonment of the application by users. By setting appropriate timeouts, you can prevent long waiting periods and provide a more responsive experience.
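A timeout can be sketched with Python’s standard `asyncio.wait_for`. In this example, `call_with_timeout`, `slow_api`, and `fast_api` are hypothetical stand-ins for real API calls, and the timeout values are arbitrary.

```python
import asyncio


async def call_with_timeout(make_call, timeout_s: float, fallback=None):
    """Await make_call(), but give up after timeout_s seconds."""
    try:
        return await asyncio.wait_for(make_call(), timeout=timeout_s)
    except asyncio.TimeoutError:
        # The call took too long; return the fallback instead of hanging
        return fallback


async def slow_api():
    await asyncio.sleep(0.5)   # simulates a slow upstream response
    return "data"


async def fast_api():
    return "data"


print(asyncio.run(call_with_timeout(slow_api, 0.05, fallback="timed out")))
```

Returning a fallback value is only one option; raising an error or retrying later may suit some applications better.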

5. Use caching.

Caching allows you to store frequently accessed API responses and serve them from a cache, rather than processing them every time a request is made. This can significantly improve API response times and reduce latency for users. Serving repeated requests from the cache also reduces the number of calls that reach your API in the first place.
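A simple time-based cache illustrates the idea. This is a sketch under assumptions: the class `TTLCache`, the method `get_or_fetch`, and the expiry policy are hypothetical, and real systems often use a dedicated cache service instead.

```python
import time


class TTLCache:
    """Cache values for a fixed number of seconds."""

    def __init__(self, ttl_s: float, clock=time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key, fetch):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]          # still fresh: skip the API call
        value = fetch()              # missing or expired: call the API
        self._store[key] = (now + self.ttl_s, value)
        return value
```

The `clock` parameter exists so the behavior can be tested without waiting for real time to pass.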

6. Provide feedback on rate limit errors.

Rate limit errors occur when API consumers exceed the allowed number of requests within a certain timeframe. By providing feedback on rate limit errors, API providers can clearly communicate that consumers have reached the limit. This promotes transparency and helps users understand why their requests are being restricted.
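One way to give this feedback over HTTP is a 429 response that says when to retry. The sketch below builds such a response as a plain tuple. `Retry-After` is a standard HTTP header, while the `X-RateLimit-*` names are a common convention rather than a standard, and the function name and JSON body shape are assumptions.

```python
import json


def rate_limit_response(limit: int, window_s: int, retry_after_s: int):
    """Build (status, headers, body) for a request rejected by the limiter."""
    headers = {
        "Content-Type": "application/json",
        "Retry-After": str(retry_after_s),   # standard HTTP header
        "X-RateLimit-Limit": str(limit),     # conventional, not standardized
        "X-RateLimit-Remaining": "0",
    }
    body = json.dumps({
        "error": "rate_limit_exceeded",
        "message": (f"Limit of {limit} requests per {window_s} seconds "
                    f"reached. Retry in {retry_after_s} seconds."),
    })
    return 429, headers, body


status, headers, body = rate_limit_response(100, 60, 30)
print(status, headers["Retry-After"])
```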

7. Have a backup plan in place.

Establish what will happen if your rate limits are breached so you can still maintain some level of performance during high-traffic periods.

By establishing what will happen if rate limits are breached, organizations can take proactive measures to mitigate the impact. This may involve queuing, throttling, or caching to handle excessive traffic. A plan also allows businesses to allocate resources effectively, ensure efficient use of infrastructure, and prioritize critical operations.
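Queuing is one of the fallback options mentioned above. As a minimal sketch, a bounded queue can hold overflow requests for later processing and reject new ones once it is full. The class `OverflowQueue` and its method names are hypothetical.

```python
import queue


class OverflowQueue:
    """Hold requests that exceed the limit, up to a fixed backlog size."""

    def __init__(self, max_pending: int):
        self._pending = queue.Queue(maxsize=max_pending)

    def submit(self, request) -> bool:
        """Queue the request; return False if the backlog is full."""
        try:
            self._pending.put_nowait(request)
            return True
        except queue.Full:
            return False

    def next_request(self):
        """Return the oldest queued request, or None if the queue is empty."""
        try:
            return self._pending.get_nowait()
        except queue.Empty:
            return None
```

Capping the backlog matters: an unbounded queue would only move the overload from the API to memory.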

API Rate Limiting Examples

1. Facebook

Facebook uses API limiting to control the rate at which third-party developers can access its platform’s resources. This practice ensures the stability, security, and fairness of the Facebook ecosystem, while providing a good user experience.

For example, Facebook sets predefined limits on the number of API requests that can be made per user within a specified time interval. These limits can vary based on factors such as the type of API endpoint, the level of API access, and the user’s privacy settings. By enforcing rate limits, Facebook prevents excessive usage and potential abuse.

2. Twitter

Twitter dynamically adjusts rate limits based on usage patterns, system load, and other factors. This allows Twitter to adapt to changing conditions and allocate resources effectively. If an application maintains good behavior and does not violate the rate limits, it may earn higher allowances over time.

Further, when an API request exceeds the allotted limit, Twitter responds with an error code to indicate the limit has been reached.

[Video: What Does Rate Limit Exceeded Mean On Twitter]

3. Google Maps

By implementing API limiting strategies, Google Maps ensures that its platform remains accessible and available for all its users and developers.

Google provides a Developer Console and dashboard for managing API projects and monitoring usage. Developers can track their API usage, view quotas and limits, and receive usage reports and alerts. The console also allows developers to analyze API performance metrics to optimize their applications.

Some Google Maps API services may have associated costs and usage-based billing. In these cases, API limiting plays a role in managing usage to prevent unexpected charges and ensure compliance with billing plans and agreements. Developers may also have the option to purchase additional quota or credits to extend their API usage beyond the free limits.

How to Implement API Rate Limiting

There are many methods that you can use to enact API rate limiting. Let’s discuss some of the most popular methods.

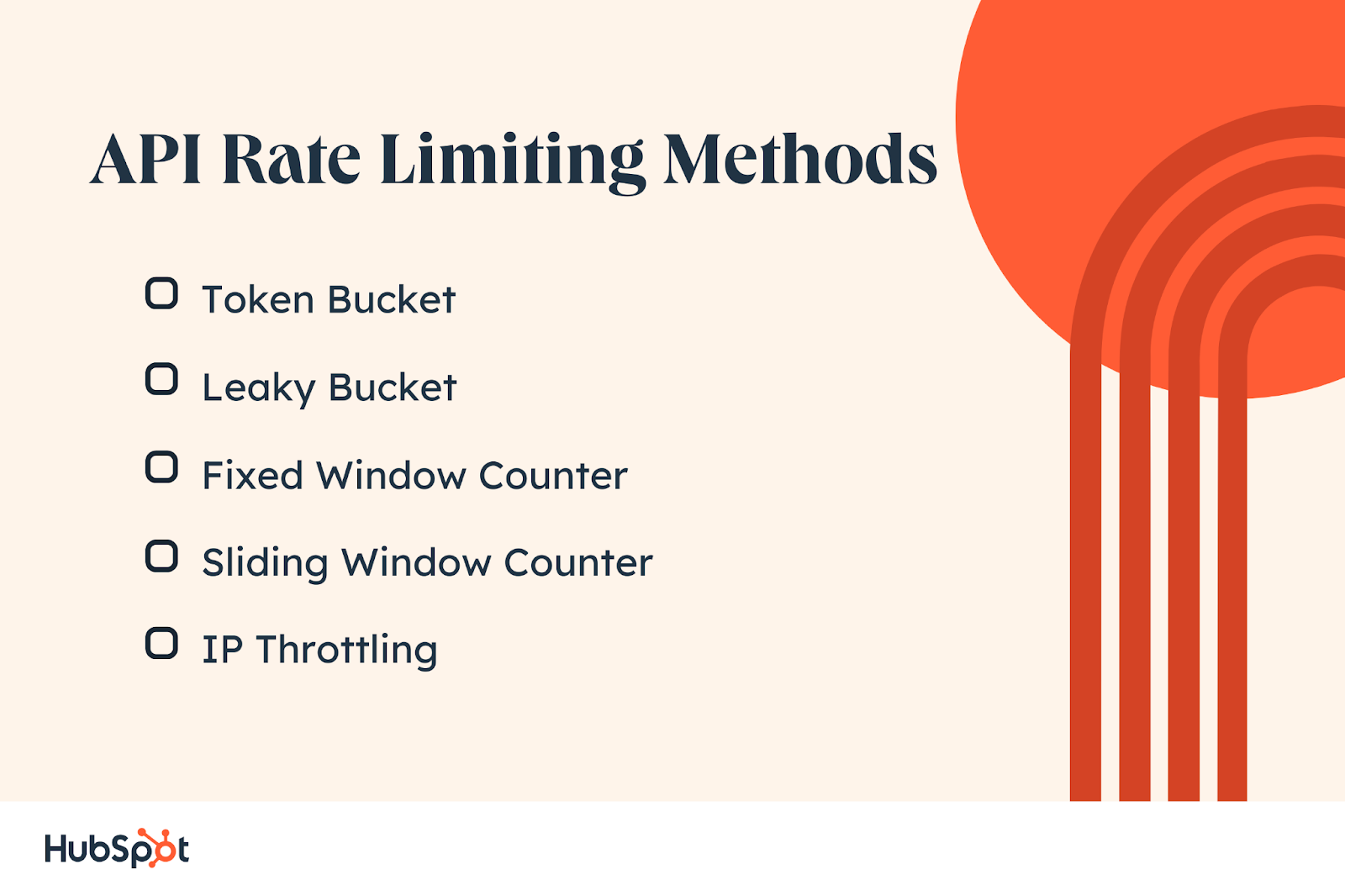

1. Token Bucket

This rate-limiting method works by restricting the number of requests a user can make in a given amount of time by assigning each user a “token bucket” of requests they are allowed to send.

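When a user sends a request, a token is removed from the bucket. Tokens are added back at a fixed rate, up to the bucket’s capacity, and a request that arrives when the bucket is empty is denied. This method tolerates short bursts, up to the size of the bucket, while still protecting against sustained high-volume requests that could otherwise overwhelm the system.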
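A token bucket can be sketched in a few lines. This version refills tokens continuously based on elapsed time, which is one common variant; the class name, parameters, and injectable `clock` are assumptions made for illustration.

```python
import time


class TokenBucket:
    """Allow requests while tokens remain; refill tokens at a steady rate."""

    def __init__(self, capacity: float, refill_per_s: float,
                 clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_s = refill_per_s
        self.clock = clock
        self.tokens = capacity       # start with a full bucket
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Add the tokens earned since the last call, capped at capacity
        earned = (now - self.updated) * self.refill_per_s
        self.tokens = min(self.capacity, self.tokens + earned)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

In practice there would be one bucket per user or API key, for example stored in a dictionary keyed by user ID.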

2. Leaky Bucket

This rate-limiting method works by assigning each user a “leaky bucket” of requests they are allowed to send.

When a request is sent, it is added to the bucket, which drains (“leaks”) at a constant rate as requests are processed. If requests arrive faster than the bucket drains and the bucket fills up, additional requests are denied until there is room again. This method is best for smoothing out bursts of requests that could otherwise overwhelm the system.
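The sketch below shows the leaky bucket used as a meter: each accepted request raises the bucket’s level, the level drains at a constant rate, and requests that would overflow the bucket are denied. The class name, parameters, and injectable `clock` are illustrative assumptions. A queue-based variant that delays requests instead of rejecting them is also common.

```python
import time


class LeakyBucket:
    """Deny requests that would overflow a bucket draining at a fixed rate."""

    def __init__(self, capacity: float, leak_per_s: float,
                 clock=time.monotonic):
        self.capacity = capacity
        self.leak_per_s = leak_per_s
        self.clock = clock
        self.level = 0.0             # start with an empty bucket
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Drain whatever has leaked out since the last call
        leaked = (now - self.updated) * self.leak_per_s
        self.level = max(0.0, self.level - leaked)
        self.updated = now
        if self.level + 1 <= self.capacity:
            self.level += 1
            return True
        return False
```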

3. Fixed Window Counter

This rate-limiting method works by limiting the number of requests a user can send in a fixed time window (such as a day or hour). When the time window has elapsed, the counter resets, and the user can continue to send requests.

This method is best for protecting against sustained high-volume requests that could otherwise overwhelm the system.
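A fixed window counter is the simplest of these methods to sketch. The class below tracks a single user’s count; the names and the injectable `clock` are assumptions for illustration.

```python
import time


class FixedWindowCounter:
    """Allow up to `limit` requests in each fixed window of `window_s` seconds."""

    def __init__(self, limit: int, window_s: float, clock=time.time):
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self.window_id = None
        self.count = 0

    def allow(self) -> bool:
        window_id = int(self.clock() // self.window_s)
        if window_id != self.window_id:
            # A new window has started: reset the counter
            self.window_id = window_id
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False
```

One known trade-off is that a user can send a full quota at the very end of one window and another at the start of the next, briefly doubling the effective rate.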

4. Sliding Window Counter

This rate-limiting method works by limiting the number of requests a user can send in a sliding time window (such as the most recent minute). Instead of resetting at fixed boundaries, the window moves with the current time, so older requests gradually stop counting toward the limit. Any request that would push the count over the limit is denied.

This method is best for protecting against rapid bursts of requests that could otherwise overwhelm the system.
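One common way to approximate a sliding window is to keep counts for the current and previous fixed windows and weight the previous count by how much of it still overlaps the sliding window. The sketch below takes that approach; the class name, parameters, and injectable `clock` are assumptions, and the weighted estimate is an approximation rather than an exact count.

```python
import time


class SlidingWindowCounter:
    """Approximate a sliding window from two adjacent fixed-window counts."""

    def __init__(self, limit: int, window_s: float, clock=time.time):
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self.window_id = int(clock() // window_s)
        self.current = 0     # requests accepted in the current fixed window
        self.previous = 0    # requests accepted in the window just before it

    def allow(self) -> bool:
        now = self.clock()
        window_id = int(now // self.window_s)
        if window_id != self.window_id:
            # Carry the old count over only if the windows are adjacent
            adjacent = window_id == self.window_id + 1
            self.previous = self.current if adjacent else 0
            self.current = 0
            self.window_id = window_id
        elapsed_fraction = (now % self.window_s) / self.window_s
        # Weight the previous window by the share still inside the sliding window
        estimate = self.previous * (1.0 - elapsed_fraction) + self.current
        if estimate + 1 <= self.limit:
            self.current += 1
            return True
        return False
```

An alternative is to store a timestamp for every request, which is exact but uses more memory per user.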

5. IP Throttling

This rate-limiting method works by limiting the number of requests a single IP address can send. When the limit is reached, any additional requests from that IP are denied until the limit has been reset. This method is best for protecting against automated bots and malicious actors sending unauthorized requests.
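IP throttling can be sketched by keeping a separate counter per address. The example below pairs it with a fixed time window, which is an assumption, since the reset policy could be any of the methods above. The class name and the sample addresses (taken from ranges reserved for documentation) are illustrative.

```python
import time


class IPThrottle:
    """Allow up to `limit` requests per IP address in each fixed window."""

    def __init__(self, limit: int, window_s: float, clock=time.time):
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self._counts = {}  # ip -> (window_id, count)

    def allow(self, ip: str) -> bool:
        window_id = int(self.clock() // self.window_s)
        seen_window, count = self._counts.get(ip, (window_id, 0))
        if seen_window != window_id:
            count = 0                # this IP's limit has reset
        if count >= self.limit:
            self._counts[ip] = (window_id, count)
            return False
        self._counts[ip] = (window_id, count + 1)
        return True
```

This sketch never evicts old entries, so a long-running service would need to expire idle addresses. Many users can also share one IP address, which is worth weighing when choosing the limit.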

All of these rate-limiting methods help protect systems from abuse and ensure their performance remains consistent by giving users just enough access to make use of the system without overwhelming it. They are essential for maintaining some level of security and performance even during high-traffic periods.

To put it simply, an API rate limit is a set of rules that limits how often a user or application can interact with an API in order to maintain its performance and protect it from abuse.

It is important to consider what type of rate limit you need in order to ensure that your system remains secure and responsive. Understanding what an API rate limit is and how it works will help you make the right choice for your application or service.

Getting Started

By understanding what an API rate limit does, you can better protect your systems from abuse and ensure their performance remains consistent. With the right rate limit in place, you can make sure your users get what they need without putting too much strain on your system.

This article was written by a human, but our team uses AI in our editorial process. Check out our full disclosure to learn more about how we use AI.
